- Minds x Machines


The Peanut Butter Dad Problem: Why AI's Literal Mind is Your Next Big Headache

From Chaos Sandwiches to Corporate Catastrophes—How AI’s Blind Obedience is Forcing a New Era of Precision


The Peanut Butter Sandwich Fiasco

There's a video that captures our modern AI struggles a little too well. Peanut Butter Dad asks his kids to write instructions for making a peanut butter and jelly sandwich. Innocent enough. But Dad follows their instructions literally.

Chaos ensues.

The kids write “put peanut butter on the bread,” and Dad places the entire jar on top of the loaf. As they frantically improve their instructions (prompts), more carnage follows: “Open the peanut butter”? Peanut Butter Dad slams it against the counter like he’s in a fight with it.

Needless to say, Gordon Ramsay would be traumatised.

Peanut Butter Dad isn't being difficult. He’s just following the kids’ prompts, exactly.

And that’s AI in a nutshell (or sandwich): it executes what you say (or write), not what you mean.

The Prediction Engine Behind AI

Understanding AI’s Literal Nature

Unlike humans, AI systems don't possess intuition, common sense, or the ability to read between the lines. They operate purely on the explicit instructions provided, processing language as data rather than meaning.

This literal behaviour stems from how AI processes information: it identifies patterns in text and predicts the most likely continuation based on training data, without truly "understanding" context the way humans do.

Inference: How AI Thinks

Understanding inference is crucial for anyone working with AI systems.

Inference is the process where the AI generates a response based on your input. It works by predicting the next word (strictly, the next token, which may be a whole word or a piece of one), one step at a time, using patterns it learned during training.
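To make “predicting the next word” concrete, here is a deliberately tiny Python sketch: a bigram counter that, given a word, predicts whichever word most often followed it in its training text. Real LLMs use neural networks over tokens and much longer context, so treat this only as an illustration of pattern-based prediction; the corpus and the function names `train_bigrams` and `predict_next` are made up for the example.

```python
from __future__ import annotations

from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict[str, Counter]:
    """'Training': count which word follows each word in the example text."""
    tokens = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """'Inference': return the continuation seen most often in training, if any."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # never seen this word, so there is no pattern to draw on
    return counts.most_common(1)[0][0]

corpus = (
    "put the peanut butter on the bread . "
    "put the jelly on the bread . "
    "spread the peanut butter with a knife ."
)
model = train_bigrams(corpus)
print(predict_next(model, "peanut"))    # butter
print(predict_next(model, "sandwich"))  # None
```

The toy has no idea what peanut butter is; it only knows that “butter” tended to follow “peanut”. Ask about a word it never saw and it has nothing to offer.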

This prediction-based approach explains both AI's remarkable capabilities and its fundamental limitations. AI can produce coherent, helpful responses because it has learned from vast amounts of human-written text.

However, it cannot verify facts on its own, and it does not reliably apply logical reasoning or understand context the way humans do.

For businesses, this distinction is critical. AI systems excel at tasks requiring pattern recognition and text generation but require additional tools and safeguards for tasks requiring accuracy, logic, or real-world knowledge verification.

AI is a sophisticated prediction engine, not a reasoning system. Success comes from leveraging its pattern-matching strengths while compensating for its reasoning limitations.

The Great AI Personality Transplant: From Free Spirit to Corporate Drone

The Wild Days of “Helpful” AI

Early AI models like GPT-3.5 and Claude 2 operated under a simple directive: “You are a helpful assistant”.

They were like overeager interns: they’d guess, embellish, and improvise to please you. Vague prompt? No problem—they’d fill in the gaps with flair. This was gold for brainstorming or whipping up a quick birthday invite.

Reinforcement Learning from Human Feedback (RLHF) represents a crucial breakthrough in AI development, allowing systems to learn from human preferences rather than just text patterns. However, this training method inadvertently created some of the problematic behaviours we see in AI systems today.

When humans rated AI responses, they naturally preferred answers that seemed helpful and confident, even when those answers were speculative. This created a feedback loop where AI learned to prioritise appearing helpful over being accurate.
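The feedback loop can be shown with a deliberately simplified Python toy. It is not RLHF itself (there is no reward model or policy update here); it only shows the selection pressure: if the rating rewards how confident an answer sounds and the rater cannot check accuracy, the confident wrong answer is the one that gets reinforced. The `Candidate` class, the confidence values, and the rating formula are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass
class Candidate:
    text: str
    sounds_confident: float  # 0..1: how sure the answer *sounds* (made-up value)
    is_accurate: bool        # ground truth that the rater in this toy cannot check

def simulated_rating(c: Candidate) -> float:
    """Toy rater on a 1-5 scale that rewards confident, helpful-sounding answers.
    Accuracy is deliberately absent from the score, mirroring a rater who can't verify it."""
    return 1.0 + 4.0 * c.sounds_confident

def reinforced(candidates: list[Candidate]) -> Candidate:
    """The answer the feedback signal favours, i.e. the behaviour training would push toward."""
    return max(candidates, key=simulated_rating)

candidates = [
    Candidate("The capital of Australia is Sydney.", sounds_confident=0.95, is_accurate=False),
    Candidate("I think it's Canberra, but please double-check.", sounds_confident=0.4, is_accurate=True),
]
winner = reinforced(candidates)
print(winner.text)         # The capital of Australia is Sydney.
print(winner.is_accurate)  # False
```

Adding an accuracy term to `simulated_rating` would flip the outcome; in practice that requires the rater, or a tool, to be able to check the facts.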

The messy peanut butter sandwich? That was part of the charm.

When you asked for a marketing plan without saying who it was for, the model simply guessed at the audience and ran with it.

But when AI moved from fun entertainment to operational tool, this same behaviour became... problematic.

When “Helpful” Becomes Harmful

The problem emerged the moment AI started doing more than writing poems. Suddenly, it wasn’t just creating content—it was used to make decisions.

Then came AI agents: systems that don't just generate text but take real actions, such as calling APIs, updating databases, and triggering financial transactions. Suddenly, creative interpretation became an operational liability, and the margin for error shrank to basically zero.

Enterprises Wanted Grown-Up AI… and Crashed the Party

The economics spoke clearly. The real revenue opportunity wasn't in helping individuals write better birthday invitations—it was in enterprise applications.

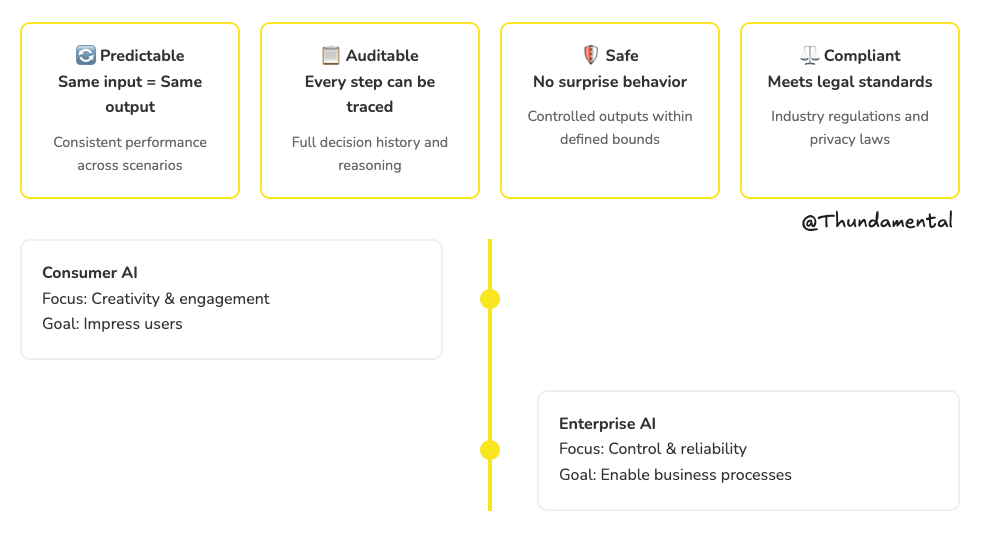

Once enterprises joined the party, the rules changed. They didn’t want inconsistent AI. They wanted compliant AI. Enterprise users weren’t asking for more creativity. They were begging for control.

Their requirements were specific and non-negotiable: consistency, compliance, and control.

And the industry responded:

Literal-first models that ask clarifying questions instead of guessing

Steerability tools that let users control variability

Tool/Function-calling features so models query reliable tools instead of inventing answers

(We will discuss these in a future post.)
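As a rough illustration of the first and third ideas, here is a hypothetical Python sketch of a literal-first tool dispatcher: when a required argument is missing it asks a clarifying question, when a registered tool exists it calls that tool instead of inventing an answer, and when no tool fits it says so. The names `TOOLS`, `handle`, and `lookup_customer` and the in-memory customer record are illustrative assumptions, not any vendor's function-calling API; real APIs each define their own schemas.

```python
from __future__ import annotations

from typing import Any, Callable

# Hypothetical in-memory "database" standing in for a reliable system of record.
CUSTOMERS = {"C-001": {"name": "Example Co", "plan": "enterprise"}}

def lookup_customer(customer_id: str) -> dict[str, str]:
    """Hypothetical tool: fetch a record instead of letting the model guess it."""
    return CUSTOMERS[customer_id]

# Registry of tools the model may call, with the arguments each one requires.
TOOLS: dict[str, tuple[Callable[..., Any], list[str]]] = {
    "lookup_customer": (lookup_customer, ["customer_id"]),
}

def handle(tool_name: str, args: dict[str, Any]) -> dict[str, Any]:
    """Literal-first handling: call a registered tool, or ask instead of guessing."""
    if tool_name not in TOOLS:
        # No reliable source available: say so rather than inventing an answer.
        return {"type": "refusal",
                "message": f"No tool named {tool_name!r} is available."}
    func, required = TOOLS[tool_name]
    missing = [name for name in required if name not in args]
    if missing:
        # A required detail is absent: ask a clarifying question.
        return {"type": "clarifying_question",
                "message": f"Before I run {tool_name}, please provide: {', '.join(missing)}."}
    return {"type": "tool_result", "value": func(**args)}

print(handle("lookup_customer", {})["message"])  # Before I run lookup_customer, please provide: customer_id.
print(handle("lookup_customer", {"customer_id": "C-001"})["value"]["plan"])  # enterprise
print(handle("forecast_revenue", {})["type"])  # refusal
```

The design choice is that the dispatcher never fills a gap on its own: every path either returns data from a tool or hands the question back to the user.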

AI had to get more obedient.

What This Means: AI is transitioning from a novelty that impresses to a utility that performs. The goal is no longer to amaze users but to consistently deliver value in professional contexts.

What This Means for You Today

Learning to work effectively with AI is no longer a futuristic skill—it's table stakes. Like Excel in the early 2000s or knowing how to write a coherent email without sounding like Clippy.

The age of magical thinking about AI is ending.

We’re entering the age of engineered systems, where output quality is a direct function of input design, context control, and systematic thinking.

This shift demands more than clever prompts. It demands Context Engineering.

Context Engineering is the art of building dynamic systems that provide LLMs with precisely the right information, in the optimal format, at the appropriate time for any given task.

This is more than writing clever prompts—it’s about building information ecosystems that support AI behaviour reliably.
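A minimal Python sketch of the idea, assuming a simple word-overlap relevance score and a word budget as a stand-in for a model's context window: it picks the most relevant background snippets that fit, drops irrelevant ones, and lays the result out in a fixed, labelled format. Real systems typically use retrieval with embeddings and token counts; `build_context`, the snippets, and the refund policy in them are hypothetical.

```python
from __future__ import annotations

import re

def words(text: str) -> set[str]:
    """Lowercase word set with punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def relevance(snippet: str, task: str) -> int:
    """Crude relevance score: how many distinct words the snippet shares with the task."""
    return len(words(snippet) & words(task))

def build_context(task: str, snippets: list[str], budget_words: int) -> str:
    """Select the most relevant snippets that fit a word budget, then lay out a structured prompt."""
    ranked = sorted(snippets, key=lambda s: relevance(s, task), reverse=True)
    chosen: list[str] = []
    used = 0
    for snippet in ranked:
        size = len(snippet.split())
        # Skip irrelevant snippets and anything that would exceed the budget.
        if relevance(snippet, task) == 0 or used + size > budget_words:
            continue
        chosen.append(snippet)
        used += size
    background = "\n".join(f"- {s}" for s in chosen) or "- (no relevant background found)"
    return (
        f"## Task\n{task}\n\n"
        f"## Background\n{background}\n\n"
        "## Rules\nUse only the background above. If a detail is missing, ask instead of guessing."
    )

task = "Draft a reply to a customer refund request for 700 dollars."
snippets = [
    "Refund requests over 500 dollars need manager approval.",
    "The office coffee machine is on level 3.",
    "Refund requests must be lodged within 30 days of purchase.",
]
print(build_context(task, snippets, budget_words=20))
```

Shrinking `budget_words` to 9 keeps only the highest-scoring snippet, which is the same trade-off context engineering makes at scale: deciding what the model sees, not just how the request is phrased.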

(We’ll also write about Context Engineering and how it applies to your work in a future post.)

This matters whether you're an AI engineer building enterprise systems or a business professional trying to get better outputs from commercial AI tools.

Like those increasingly frustrated kids in the peanut butter video, we're all learning to be more precise, explicit, and systematic in our communications with AI systems.

We can’t afford to treat AI as magic. It’s infrastructure now.

If we want reliable results, we need to design reliable systems—and that starts with building a workforce that speaks AI fluently.

You need to start building your AI skills NOW!

At Thundamental, we help organisations become AI Fluent and develop Context Aware Solutions with our AI Capability Model.

Thundamental offers:

Organisational AI literacy: Every team—from marketing to legal—needs to understand how to give AI systems instructions that don’t backfire.

Policy and governance: Clear frameworks for how AI can and shouldn’t be used, especially in regulated industries.

Training programs: Not just for engineers. For product managers, analysts, customer support, HR—anyone using AI to get things done.

Start Developing AI Fluency now.

👉 Schedule a free consultation and let’s get started.

If you forget everything else, remember this…

The age of “playing with AI” is ending. The age of working with it well is here.
